Apple's new iPhone 17e is shaping up to be a great midrange device, but how does it stack up against the base iPhone 17? [...]

Thu, Mar 05, 2026 | Source: ZDNet – Big Data

With a new SDQ-Mini LED panel, TCL's X11L produces a picture on par with some of the best OLED models from Sony and LG. [...]

Thu, Mar 05, 2026 | Source: ZDNet – Big Data

A machine learning pipeline identifies low-frequency Raman signatures as reliable indicators of liquid-like ionic conduction in solid electrolytes for batteries. [...]

Wed, Mar 04, 2026 | Source: Nanowerk

Methanol is a key starting material for chemical products. Researchers can now produce this precursor from CO2 and hydrogen with high efficiency by using isolated metal atoms as catalysts. [...]

Wed, Mar 04, 2026 | Source: Nanowerk

Researchers have built the smallest OLED pixel ever made—just 300 nanometers across—without sacrificing brightness. By redesigning the pixel with a nano-sized optical antenna and a protective insulation layer, they prevented the short circuits that normally plague devices at this scale. The result is a stable, ultra-tiny light source that could [...]

Wed, Mar 04, 2026 | Source: Science Daily

Researchers at Kobe University have developed an AI system that can detect acromegaly, a rare hormone disorder, by analyzing photos of the back of the hand and a clenched fist. The disease often develops slowly and can take years to diagnose, even though untreated cases may shorten life expectancy. [...]

Wed, Mar 04, 2026 | Source: Science Daily

The transformational potential of AI is already well established. Enterprise use cases are building momentum and organizations are transitioning from pilot projects to AI in production. Companies are no longer just talking about AI; they are redirecting budgets and resources to make it happen. Many are already experimenting with agentic [...]

Wed, Mar 04, 2026 | Source: Technology Review – AI

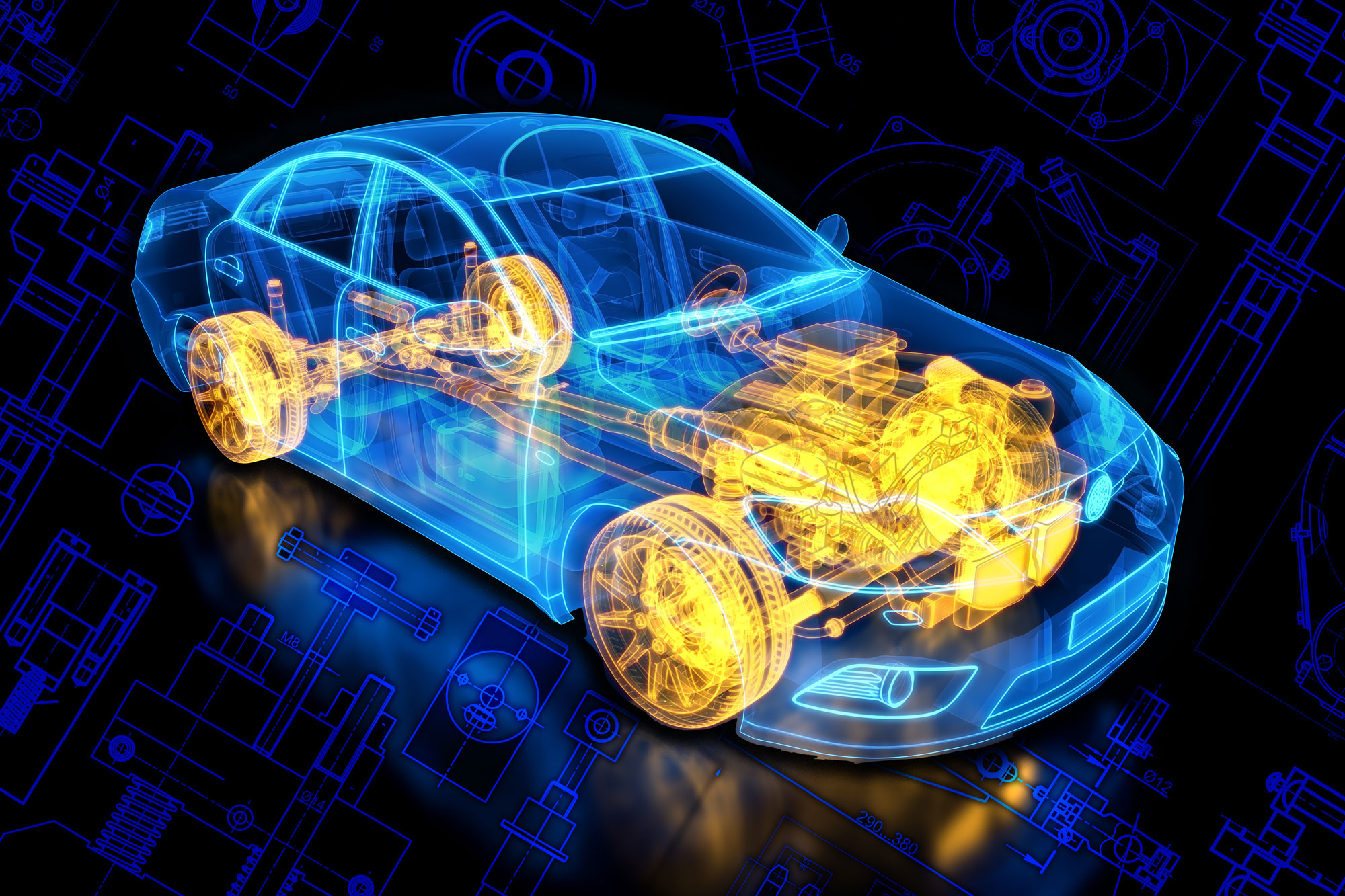

Many engineering challenges come down to the same headache: too many knobs to turn and too few chances to test them. Whether tuning a power grid or designing a safer vehicle, each evaluation can be costly, and there may be hundreds of variables that could matter. Consider car safety design. [...]

Wed, Mar 04, 2026 | Source: MIT – AI

The Google Pixel 10a may not be the upgrade you expected, but it beats the more expensive Pixel 10 in key ways. [...]

Wed, Mar 04, 2026 | Source: ZDNet – Big Data

BIOPIX is a retina-inspired biohybrid image sensor that combines biological liquid environments with organic electronics to generate real-time images on a display. [...]

Tue, Mar 03, 2026 | Source: Nanowerk

Researchers have discovered how tiny organisms seemingly break the laws of physics to swim faster. Such secrets of mesoscale physics and fluid dynamics could open entirely new pathways for engineering and medicine. [...]

Tue, Mar 03, 2026 | Source: Nanowerk

A famously resilient bacterium may be tough enough to survive one of the most violent events imaginable on Mars. In laboratory experiments designed to mimic the crushing shock of a massive asteroid impact, researchers squeezed Deinococcus radiodurans between steel plates and blasted it with pressures reaching 3 GPa (30,000 times [...]

Tue, Mar 03, 2026 | Source: Science Daily

Astronomers using the James Webb Space Telescope have spotted the most distant “jellyfish galaxy” ever seen — a cosmic oddity streaming long, tentacle-like trails of gas and newborn stars as it speeds through a dense galaxy cluster. The galaxy appears as it was 8.5 billion years ago, revealing that the [...]

Tue, Mar 03, 2026 | Source: Science Daily

At Mobile World Congress, Lenovo previewed a mix of new laptops and bold conceptual devices that push the boundaries of personal computing. [...]

Mon, Mar 02, 2026 | Source: ZDNet – Big Data

Advanced AI tools fail to find a link between the physical structure of the brain and navigation ability, challenging decades of neuroscientific assumptions. [...]

Mon, Mar 02, 2026 | Source: Neuroscience News – Deep Learning

On February 28, OpenAI announced it had reached a deal that will allow the US military to use its technologies in classified settings. CEO Sam Altman said the negotiations, which the company began pursuing only after the Pentagon’s public reprimand of Anthropic, were “definitely rushed.”

In its announcements, OpenAI took great [...]

Mon, Mar 02, 2026 | Source: Technology Review – AI

As millions turn to ChatGPT and other AI chatbots for therapy-style advice, new research from Brown University raises a serious red flag: even when instructed to act like trained therapists, these systems routinely break core ethical standards of mental health care. In side-by-side evaluations with peer counselors and licensed psychologists, [...]

Mon, Mar 02, 2026 | Source: Science Daily – Cybernetics

Pull the plug! Pull the plug! Stop the slop! Stop the slop! For a few hours this Saturday, February 28, I watched as a couple of hundred anti-AI protesters marched through London’s King’s Cross tech hub, home to the UK headquarters of OpenAI, Meta, and Google DeepMind, chanting slogans and [...]

Mon, Mar 02, 2026 | Source: Technology Review – AI

During a summer internship at MIT Lincoln Laboratory, Ivy Mahncke, an undergraduate student of robotics engineering at Olin College of Engineering, took a hands-on approach to testing algorithms for underwater navigation. She first discovered her love for working with underwater robotics as an intern at the Woods Hole Oceanographic Institution [...]

Fri, Feb 27, 2026 | Source: MIT – AI

Burrowed in the alleys of Hongik-dong, a hushed residential neighborhood in eastern Seoul, is a faded stone-tiled building stamped “Korea Baduk Association,” the governing body for professional Go. The game is an ancient one, with sacred stature in South Korea.

But inside the building, rooms once filled with the soft clatter [...]

Fri, Feb 27, 2026 | Source: Technology Review – AI

Reasoning large language models (LLMs) are designed to solve complex problems by breaking them down into a series of smaller steps. These powerful models are particularly good at challenging tasks like advanced programming and multistep planning. But developing reasoning models demands an enormous amount of computation and energy due to inefficiencies [...]

Thu, Feb 26, 2026 | Source: MIT – AI

Have you ever had an idea for something that looked cool, but wouldn’t work well in practice? When it comes to designing things like decor and personal accessories, generative artificial intelligence (genAI) models can relate. They can produce creative and elaborate 3D designs, but when you try to fabricate such [...]

Wed, Feb 25, 2026 | Source: MIT – AI

Qubits, the heart of quantum computers, can fluctuate in performance within fractions of a second, but until now scientists couldn’t see it happening. Researchers at NBI have built a real-time monitoring system that tracks these rapid fluctuations about 100 times faster than previous methods. Using fast FPGA-based control hardware, they [...]

Fri, Feb 20, 2026 | Source: Science Daily – Cybernetics

Neuromorphic computers modeled after the human brain can now solve the complex equations behind physics simulations — something once thought possible only with energy-hungry supercomputers. The breakthrough could lead to powerful, low-energy supercomputers while revealing new secrets about how our brains process information. [...]

Sat, Feb 14, 2026 | Source: Science Daily – Cybernetics

Researchers developed an advanced AI system named YORU that can identify specific animal behaviors with over 90% accuracy across multiple species. By combining this high-speed recognition with optogenetics, the team successfully demonstrated the ability to shut down specific brain circuits in real-time using targeted light. [...]

Wed, Feb 11, 2026 | Source: Neuroscience News – Deep Learning

Researchers at the University of Michigan have created an AI system that can interpret brain MRI scans in just seconds, accurately identifying a wide range of neurological conditions and determining which cases need urgent care. Trained on hundreds of thousands of real-world scans along with patient histories, the model achieved [...]

Tue, Feb 10, 2026 | Source: Science Daily – Cybernetics